
| | |
|---|---|
| Original author(s) | LinkedIn |
| Developer(s) | Apache Software Foundation |
| Initial release | January 2011[2] |
| Stable release | 3.7.1[3] |
| Repository | |
| Written in | Scala, Java |
| Operating system | Cross-platform |
| Type | Stream processing, Message broker |
| License | Apache License 2.0 |
| Website | kafka |

Apache Kafka is a distributed event store and stream-processing platform. It is an open-source system, written in Java and Scala, developed by the Apache Software Foundation. The project aims to provide a unified, high-throughput, low-latency platform for handling real-time data feeds. Kafka can connect to external systems (for data import and export) via Kafka Connect, and provides the Kafka Streams library for stream-processing applications. Kafka uses a binary TCP-based protocol that is optimized for efficiency and relies on a "message set" abstraction that groups messages together to reduce the overhead of network round trips. This "leads to larger network packets, larger sequential disk operations, contiguous memory blocks [...] which allows Kafka to turn a bursty stream of random message writes into linear writes."[4]
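The effect of grouping messages can be illustrated with a minimal sketch. It is not Kafka's wire format or client code; the function name `batch_messages` and the size limit are hypothetical, and the only point is that sending messages in groups reduces the number of network round trips.

```python
def batch_messages(messages, max_batch_size):
    """Group messages into consecutive batches of at most max_batch_size.

    Hypothetical helper for illustration only: each batch stands for
    one network request carrying several messages.
    """
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    return [
        messages[start:start + max_batch_size]
        for start in range(0, len(messages), max_batch_size)
    ]


messages = [f"event-{i}" for i in range(10)]
batches = batch_messages(messages, max_batch_size=4)

# Ten messages sent one by one would need ten requests;
# grouped in batches of up to four they need three.
print(len(messages), "messages in", len(batches), "requests")
```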

Kafka was originally developed at LinkedIn and was open-sourced in early 2011. Jay Kreps, Neha Narkhede and Jun Rao co-created Kafka.[5] Kafka graduated from the Apache Incubator on 23 October 2012.[6] Jay Kreps chose to name the software after the author Franz Kafka because it is "a system optimized for writing", and he liked Kafka's work.[7]

Apache Kafka is based on the commit log, and it allows users to subscribe to it and publish data to any number of systems or real-time applications. Example applications include managing passenger and driver matching at Uber, providing real-time analytics and predictive maintenance for British Gas smart home, and performing numerous real-time services across all of LinkedIn.[8]


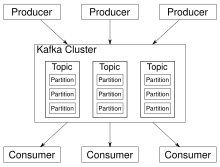

Kafka stores key-value messages that come from arbitrarily many processes called "producers". The data is organized into "topics", and each topic is divided into "partitions". Within a partition, messages are strictly ordered by their offsets (the position of a message within a partition), and are indexed and stored together with a timestamp. Other processes called "consumers" can read messages from partitions. For stream processing, Kafka offers the Streams API, which allows writing Java applications that consume data from Kafka and write results back to Kafka. Apache Kafka also works with external stream-processing systems such as Apache Apex, Apache Beam, Apache Flink, Apache Spark, Apache Storm, and Apache NiFi.
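The relationship between topics, partitions, and offsets can be sketched with an in-memory model. This is an illustration under stated assumptions, not Kafka's implementation: the `Topic` and `Record` classes are hypothetical, and the key-to-partition mapping (CRC32 modulo the partition count) is a stand-in chosen for determinism rather than the partitioner Kafka clients actually use.

```python
import zlib
from dataclasses import dataclass, field


@dataclass
class Record:
    offset: int       # position of the record within its partition
    key: str
    value: str
    timestamp: float


@dataclass
class Topic:
    name: str
    num_partitions: int
    partitions: list = field(init=False)

    def __post_init__(self):
        # One append-only list per partition.
        self.partitions = [[] for _ in range(self.num_partitions)]

    def partition_for(self, key):
        # Assumption: a simple hash of the key; not Kafka's real partitioner.
        return zlib.crc32(key.encode("utf-8")) % self.num_partitions

    def append(self, key, value, timestamp):
        """Producer side: append a record and return (partition, offset)."""
        partition = self.partition_for(key)
        log = self.partitions[partition]
        record = Record(len(log), key, value, timestamp)
        log.append(record)
        return partition, record.offset

    def read(self, partition, from_offset):
        """Consumer side: read records starting at a given offset."""
        return self.partitions[partition][from_offset:]


topic = Topic("rides", num_partitions=3)
partition, first = topic.append("driver-1", "available", timestamp=1.0)
_, second = topic.append("driver-1", "matched", timestamp=2.0)

# Records with the same key land in the same partition, in offset order.
print([r.value for r in topic.read(partition, from_offset=first)])
```

In this model a consumer tracks only the next offset it wants to read, so different consumers can read the same partition independently.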

Kafka runs on a cluster of one or more servers (called brokers), and the partitions of all topics are distributed across the cluster nodes. Additionally, partitions are replicated to multiple brokers. This architecture allows Kafka to deliver massive streams of messages in a fault-tolerant fashion and has allowed it to replace some conventional messaging systems, such as those based on Java Message Service (JMS) and Advanced Message Queuing Protocol (AMQP). Since the 0.11.0.0 release, Kafka offers transactional writes, which provide exactly-once stream processing using the Streams API.

Kafka supports two types of topics: regular and compacted. Regular topics can be configured with a retention time or a space bound. If there are records that are older than the specified retention time, or if the space bound is exceeded for a partition, Kafka is allowed to delete old data to free storage space. By default, topics are configured with a retention time of 7 days, but it is also possible to store data indefinitely. For compacted topics, records do not expire based on time or space bounds. Instead, Kafka treats later messages as updates to earlier messages with the same key and guarantees never to delete the latest message per key. Users can delete messages entirely by writing a so-called tombstone message, which has a null value for a specific key.
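The two cleanup behaviours can be sketched as functions over a list of records. This is a simplified model, not Kafka's log cleaner: the function names and the dictionary record layout are hypothetical, and tombstones are dropped immediately here, whereas a real broker keeps them for a configurable period so that consumers can observe the deletion.

```python
def apply_retention(log, now, retention_seconds):
    """Regular topic: keep only records within the retention time."""
    return [r for r in log if now - r["timestamp"] <= retention_seconds]


def compact(log):
    """Compacted topic: keep the latest record per key.

    A record whose value is None acts as a tombstone and removes the key.
    """
    latest_position = {}
    for position, record in enumerate(log):
        latest_position[record["key"]] = position
    survivors = sorted(latest_position.values())  # preserve log order
    return [log[i] for i in survivors if log[i]["value"] is not None]


log = [
    {"key": "a", "value": "1", "timestamp": 0},
    {"key": "b", "value": "2", "timestamp": 10},
    {"key": "a", "value": "3", "timestamp": 20},
    {"key": "b", "value": None, "timestamp": 30},  # tombstone for "b"
]

print(compact(log))
# [{'key': 'a', 'value': '3', 'timestamp': 20}]

print(len(apply_retention(log, now=35, retention_seconds=20)))
# 2
```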

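There are five major APIs in Kafka: the Producer API, for publishing streams of records; the Consumer API, for subscribing to topics and processing their records; the Connect API, for linking topics to external systems through reusable connectors; the Streams API, for transforming input streams into output streams; and the Admin API, for managing topics, brokers, and other Kafka objects.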

The consumer and producer APIs are decoupled from the core functionality of Kafka through an underlying messaging protocol. This allows compatible API layers to be written in any programming language that are as efficient as the Java APIs bundled with Kafka. The Apache Kafka project maintains a list of such third-party APIs.


Kafka Connect (or Connect API) is a framework for importing data from and exporting data to other systems. It was added in the Kafka 0.9.0.0 release and uses the Producer and Consumer API internally. The Connect framework itself executes so-called "connectors" that implement the actual logic to read data from or write data to other systems. The Connect API defines the programming interface that must be implemented to build a custom connector. Many open-source and commercial connectors for popular data systems are already available. However, Apache Kafka itself does not include production-ready connectors.

Kafka Streams (or Streams API) is a stream-processing library written in Java. It was added in the Kafka 0.10.0.0 release. The library allows for the development of stateful stream-processing applications that are scalable, elastic, and fully fault-tolerant. The main API is a stream-processing domain-specific language (DSL) that offers high-level operators such as filter, map, grouping, windowing, aggregation, and joins, as well as the notion of tables. Additionally, the Processor API can be used to implement custom operators for a lower-level development approach. The DSL and Processor API can also be mixed. For stateful stream processing, Kafka Streams uses RocksDB to maintain local operator state. Because RocksDB can write to disk, the maintained state can be larger than the available main memory. For fault tolerance, all updates to local state stores are also written into a topic in the Kafka cluster. This allows state to be recreated by reading those topics and feeding all data into RocksDB. The cited documentation covers version 2.8.0 of the Streams API[9] and also describes how to upgrade to the latest version.[10]
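The recovery mechanism described above can be sketched in a few lines. This is a toy model, not the Kafka Streams API: the `CountingStore` class is hypothetical, a plain dictionary stands in for RocksDB, and a Python list stands in for the changelog topic in the Kafka cluster.

```python
class CountingStore:
    """Toy stateful operator that counts occurrences per key."""

    def __init__(self, changelog):
        self.state = {}             # stand-in for the local RocksDB store
        self.changelog = changelog  # stand-in for the changelog topic

    def increment(self, key):
        new_count = self.state.get(key, 0) + 1
        self.state[key] = new_count
        # Every state update is also written to the changelog.
        self.changelog.append((key, new_count))
        return new_count

    @classmethod
    def restore(cls, changelog):
        """Rebuild local state by replaying the changelog from the start."""
        store = cls(changelog)
        for key, count in changelog:
            store.state[key] = count
        return store


changelog_topic = []
store = CountingStore(changelog_topic)
for word in ["kafka", "streams", "kafka"]:
    store.increment(word)

# Simulate losing the local store and recovering on another instance.
recovered = CountingStore.restore(changelog_topic)
print(recovered.state)
# {'kafka': 2, 'streams': 1}
```

Because the changelog holds the latest value per key last, replaying it in order is enough to reach the same state as the original store.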


Up to version 0.9.x, Kafka brokers are backward compatible with older clients only. Since Kafka 0.10.0.0, brokers are also forward compatible with newer clients. If a newer client connects to an older broker, it can only use the features the broker supports. For the Streams API, full compatibility starts with version 0.10.1.0: a 0.10.1.0 Kafka Streams application is not compatible with 0.10.0 or older brokers.
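The rule that a newer client is limited to what an older broker supports can be modelled as choosing the highest version both sides understand. The sketch below is a simplified illustration, not the Kafka wire protocol; the function name `negotiate` and the version ranges are hypothetical.

```python
def negotiate(client_range, broker_range):
    """Return the highest version supported by both sides, or None.

    Each range is a (min_version, max_version) tuple of integers.
    """
    lowest_common = max(client_range[0], broker_range[0])
    highest_common = min(client_range[1], broker_range[1])
    if lowest_common > highest_common:
        return None  # no overlap: the two sides cannot talk
    return highest_common


# A newer client (versions 2 to 7) talking to an older broker (0 to 4)
# falls back to the newest version the broker understands.
print(negotiate(client_range=(2, 7), broker_range=(0, 4)))
# 4
```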

Monitoring end-to-end performance requires tracking metrics from brokers, consumers, and producers, in addition to monitoring ZooKeeper, which Kafka uses for coordination among consumers.[11][12] Several monitoring platforms are available to track Kafka performance. In addition to these platforms, Kafka data can also be collected using tools commonly bundled with Java, including JConsole.[13]


People often ask how Kafka got its name and whether it has anything to do with the application itself. Jay Kreps offered the following insight: "I thought that since Kafka was a system optimized for writing, using a writer's name would make sense. I had taken a lot of lit classes in college and liked Franz Kafka."
